AMD’s hardware teams have tried to redefine AI inferencing with powerful chips like the Ryzen AI Max and Threadripper. But in software, the company has been largely absent where PCs are concerned. That’s changing, AMD executives say.

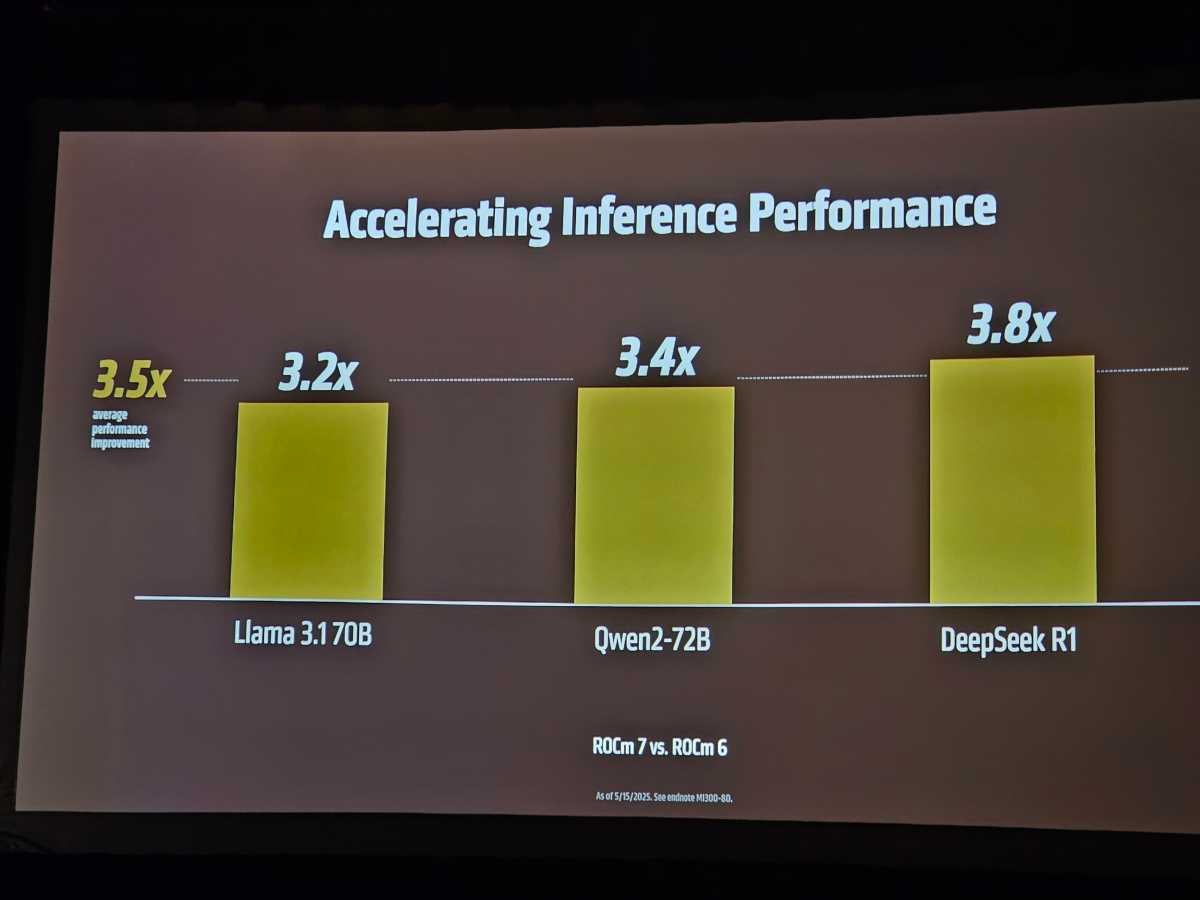

AMD’s Advancing AI event Thursday focused on enterprise-class GPUs like its Instinct lineup. But AMD depends just as much on a software platform you may not have heard of, called ROCm. AMD is releasing ROCm 7 today, which the company says can triple AI inferencing performance through software alone. And ROCm is finally coming to Windows to challenge Nvidia’s CUDA supremacy.

Radeon Open Compute (ROCm) is AMD’s open software stack for AI computing, with drivers and tools to run AI workloads. Remember the Nvidia GeForce RTX 5060 debacle of a few weeks back? Without a software driver, Nvidia’s latest GPU was a lifeless hunk of silicon.

Early on, AMD was in the same pickle. Without the limitless coffers of companies like Nvidia, AMD made a choice: it would prioritize big businesses with ROCm and its enterprise GPUs instead of client PCs. Ramine Roane, corporate vice president of the AI solutions group, called that a “sore point”: “We focused ROCm on the cloud GPUs, but it wasn’t always working on the endpoint, so we’re fixing that.”

Mark Hachman / Foundry

In today’s world, simply shipping the best product isn’t always enough; winning over customers and partners willing to commit to the product is a necessity. That’s why former Microsoft CEO Steve Ballmer famously chanted “Developers, developers, developers” on stage. It’s also why, when Sony built a Blu-ray drive into the PlayStation 3, movie studios gave the new video format a critical mass that the rival HD DVD format never had.

Now, AMD’s Roane said that the company belatedly realized that AI developers like Windows, too. “It was a decision to basically not use resources to port the software to Windows, but now we realize that, hey, developers actually really care about that,” he said.

PyTorch support for ROCm will arrive in preview in the third quarter of 2025, and ONNX-EP support will arrive in July, Roane said.

Presence is more important than performance

All this means that AMD processors will finally gain a much larger presence in AI applications. If you own a laptop with a Ryzen AI processor, or a desktop with a Ryzen AI Max chip or a Radeon GPU inside, it will have more opportunities to tap into AI applications. PyTorch, for example, is a machine-learning framework that popular AI libraries like Hugging Face’s Transformers run on top of. Supporting it should make it much easier for AI models to take advantage of Ryzen and Radeon hardware.

ROCm will also ship “in box” with Linux distributions: Red Hat and Ubuntu (both in the second half of 2025), as well as SUSE.

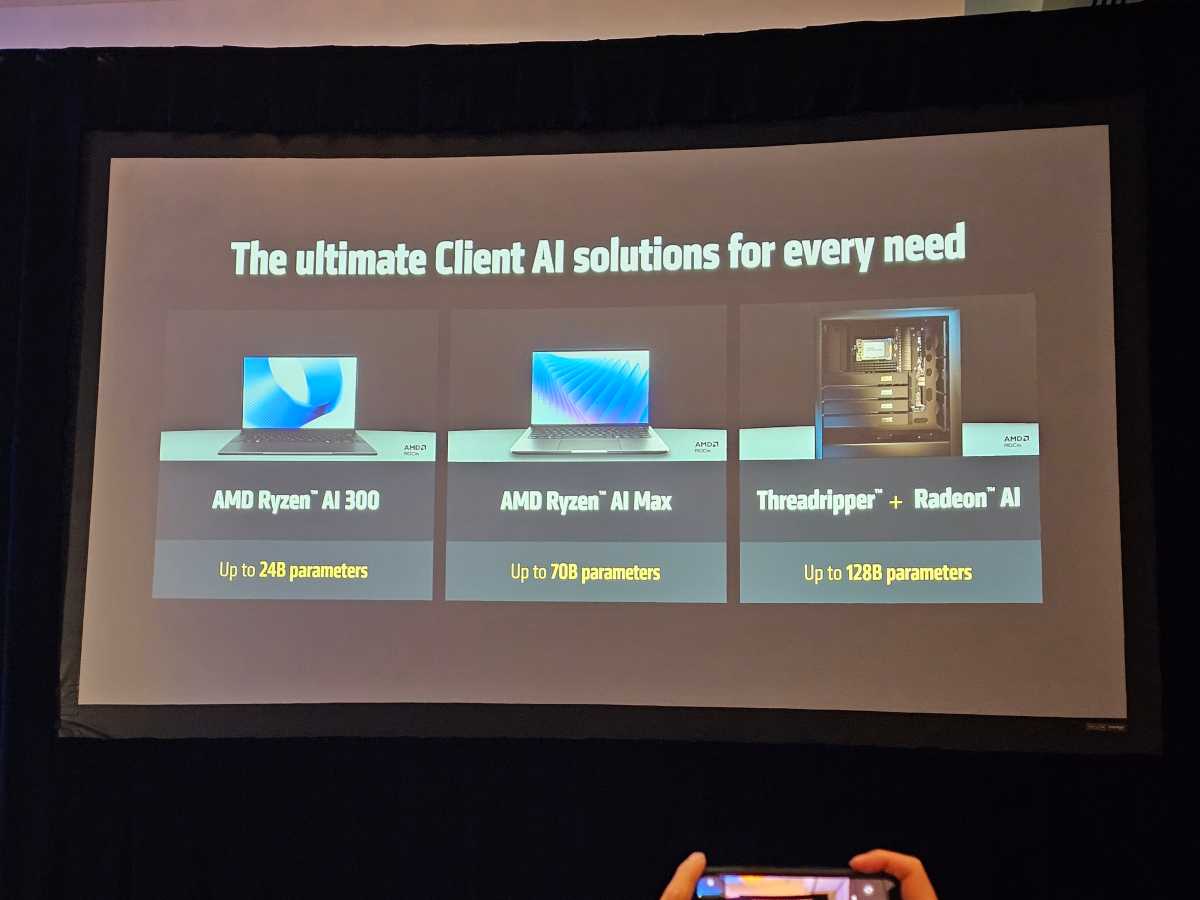

Roane also helpfully provided some context on which model sizes each AMD platform should be able to run, from a Ryzen AI 300 notebook up to a Threadripper platform.

Mark Hachman / Foundry
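Which model sizes a given platform can run comes down largely to memory. As a rough, back-of-the-envelope sketch (the function name `weight_memory_gb` is hypothetical, not an AMD tool), the memory needed just to store a model's weights is about its parameter count multiplied by the bytes used per parameter. This assumption ignores the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: memory ~= parameters x bytes per parameter. This ignores the
# KV cache, activations, and runtime overhead, so real usage is higher.


def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    # Billions of parameters x bytes each = billions of bytes = GB.
    return params_billions * bytes_per_param


if __name__ == "__main__":
    # Parameter counts taken from model names; precisions are illustrative.
    for name, params in [("Llama 3.1 70B", 70), ("Qwen2-72B", 72)]:
        for bits in (16, 8, 4):
            print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

At 16-bit precision, a 70-billion-parameter model needs about 140 GB for its weights alone; at 8 bits, about 70 GB; at 4 bits, about 35 GB. That arithmetic is why lower-precision formats let larger models fit on PC hardware.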

…but performance substantially improves, too

The AI performance improvements that ROCm 7 adds are substantial: 3.2X in Llama 3.1 70B, 3.4X in Qwen2-72B, and 3.8X in DeepSeek R1. (The “B” stands for the number of parameters, in billions; generally, the more parameters a model has, the higher the quality of its output.) Those numbers matter more today than they have in the past because, Roane said, inferencing chips are showing steeper growth than processors used for training.

(“Training” generates the AI models used in products like ChatGPT or Copilot. “Inferencing” refers to the actual process of using AI. In other words, you might train an AI to know everything about baseball; when you ask it if Babe Ruth was better than Willie Mays, you’re using inferencing.)

Mark Hachman / Foundry

AMD said that the improved ROCm stack delivered a similar gain in training performance, about three times that of the previous generation. Finally, AMD said that its own MI355X running the new ROCm software would outperform an Nvidia B200 by 1.3X on the DeepSeek R1 model using 8-bit floating-point (FP8) precision.

Again, performance matters. In AI, the goal is to generate as many tokens as quickly as possible; in games, it’s polygons or pixels instead. Simply giving developers a way to take advantage of the AMD hardware you already own is a win-win for you and AMD alike.

The one thing AMD doesn’t have is a consumer-focused application that encourages people to try AI, whether that means LLMs, AI art, or something else. Intel publishes AI Playground, and Nvidia, though it doesn’t own the technology, worked with a third-party developer on LM Studio. One of the convenient features of AI Playground is that every available model has been quantized (converted to lower-precision numbers to save memory) and tuned for Intel’s hardware.

Roane said that similarly tuned models exist for AMD hardware like the Ryzen AI Max. However, consumers have to find them on repositories like Hugging Face and download them themselves.
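Quantization, mentioned above in connection with AI Playground, is a large part of what lets big models fit on PC hardware. Here is a minimal sketch of one common scheme, symmetric 8-bit quantization with a single scale factor per tensor. It is a hypothetical illustration (`quantize_int8` and `dequantize_int8` are our own names), not the method Intel or AMD actually uses for their tuned models.

```python
# Minimal sketch of symmetric 8-bit quantization with one shared scale.
# Hypothetical illustration only; not Intel's or AMD's actual pipeline.


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The largest-magnitude weight maps to +/-127; all-zero input uses scale 1.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    approx = dequantize_int8(q, scale)
    print("quantized:", q, "scale:", scale)
    print("recovered:", approx)
```

Each weight is stored in one byte instead of two or four, at the cost of a rounding error of at most half the scale factor per weight. Production toolchains typically use finer-grained scales (per channel or per group of weights) to keep that error lower.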

Roane called AI Playground a “good idea.” “No specific plans right now, but it’s definitely a direction we would like to move,” he said, in response to a question from PCWorld.com.